Deepfakes—synthetic audio, video, or image content generated through machine learning—are increasingly being repurposed for fraud. While early examples focused on entertainment or misinformation, analysts have documented a sharp rise in their use for impersonation in financial contexts.

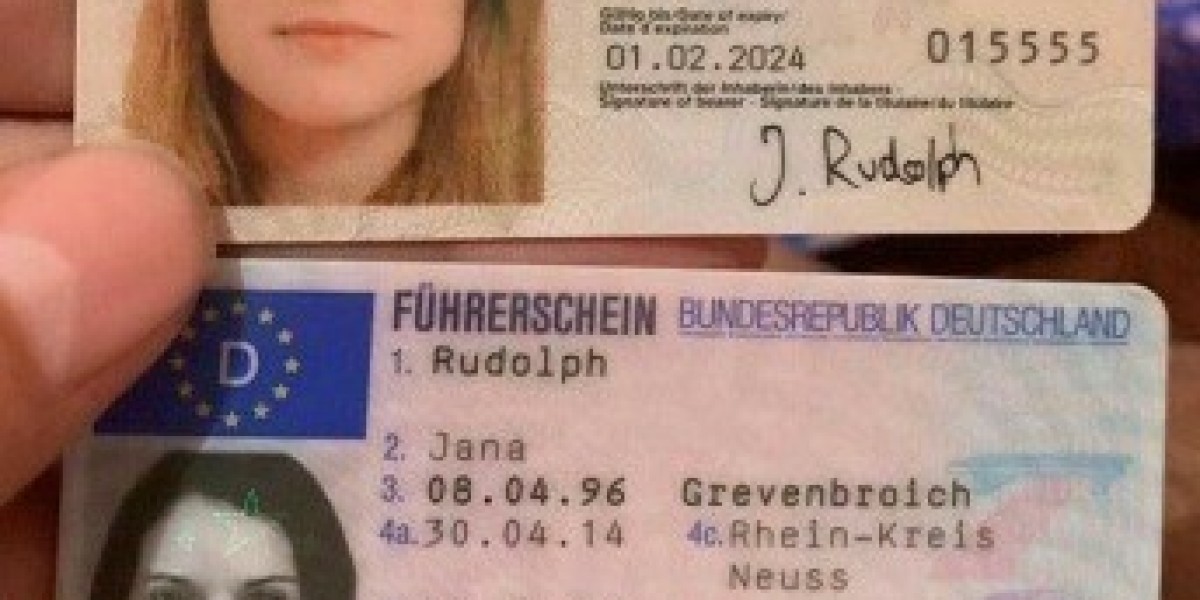

According to multiple threat briefings, deepfakes now appear in business email compromise (BEC) variants, investor scams, and synthetic KYC (Know Your Customer) submissions. Unlike traditional spoofing, deepfakes exploit visual and auditory trust cues that authentication systems struggle to verify.

Recent statistics from the NCSC indicate that reported deepfake-enabled fraud attempts have grown year over year, though confirmed financial losses remain difficult to quantify. The gap between rising attempts and hard-to-confirm losses may mean that detection is currently outpacing exploitation, but that advantage is unlikely to last.

Data Gaps and Measurement Challenges

Evaluating deepfake-related losses requires separating confirmed incidents from suspected ones. Financial institutions rarely disclose full case details due to reputational and regulatory constraints, and many victims cannot prove that manipulated media directly caused their losses.

Analysts therefore use proxy indicators—growth in impersonation reports, forensic detection software adoption, and correlated phishing data—to approximate scale. Estimates vary widely. Some private cybersecurity studies suggest deepfake involvement in 2–3% of digital fraud reports in 2024, while others find less than 1% with verifiable evidence.

The cautious conclusion: deepfake scams account for a small share of fraud today but are growing rapidly in capability and visibility. Data uncertainty justifies attention, not alarm.

Comparative Context: Old Tools, New Mediums

Historically, fraud schemes have evolved through improved mimicry. Email spoofing forged sender addresses; voice phishing simulated an official tone; now, deepfakes synthesize entire personas. The underlying vector, trust manipulation, remains constant.

When comparing across eras, deepfakes represent a qualitative leap in credibility rather than a quantitative explosion in cases. Traditional phishing still dominates total financial fraud volume by orders of magnitude. Yet deepfakes hold unique potential to automate high-value deception, such as fraudulent fund transfers authorized by impersonated executives.

A recent benchmark analysis by the NCSC classified deepfake-enabled fraud as “low frequency, high impact.” That risk profile mirrors early ransomware: rare at first, then rapidly industrialized once profit models stabilized.

Case Study Trends and Early Indicators

Most verified incidents fall into two categories: corporate impersonation and consumer investment scams.

1. Corporate impersonation: Fraudsters clone a senior leader’s face or voice to request urgent wire transfers. In one documented case, an audio deepfake convinced a regional manager to authorize a six-figure transaction within minutes.

2. Consumer investment fraud: Attackers create realistic promotional videos featuring fabricated endorsements or deepfaked influencers to market fake crypto platforms.

Across both categories, time-to-detection averaged less than two days in 2024 datasets, showing that alert staff and automated monitoring systems still mitigate losses when escalation channels exist. However, as generative AI quality improves, detection latency is expected to lengthen.

Comparing Detection Techniques

Detection methods vary in accuracy and accessibility.

· AI-based classifiers trained on artifact analysis can flag deepfakes with reported accuracy above 90% under controlled conditions. In live deployments, performance drops below 70% due to compression and environmental noise.

· Human verification protocols, such as secondary contact validation, remain more reliable for now but are less scalable.

· Cross-channel correlation, where voice, email, and video metadata are compared, offers a promising hybrid defense.

The trade-off is cost versus coverage. Enterprise systems can integrate multiple detection layers; smaller firms often rely on user awareness. For individuals, education remains the most practical defense.
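To make the cross-channel correlation idea above concrete, here is a minimal Python sketch. It assumes each channel has already been reduced to a small record; the `ChannelSignal` fields, the `correlate` function, and the 0.5 score threshold are all hypothetical choices for illustration and do not describe any specific product.

```python
from dataclasses import dataclass


@dataclass
class ChannelSignal:
    """One channel's view of a request (all fields are illustrative assumptions)."""
    channel: str             # e.g. "voice", "email", "video"
    claimed_identity: str    # who the sender claims to be
    origin_known: bool       # does the number/domain/device match records on file?
    classifier_score: float  # 0.0 (likely authentic) to 1.0 (likely synthetic)


def correlate(signals, score_threshold=0.5):
    """Return a list of human-readable reasons to escalate; empty means no mismatch found."""
    reasons = []
    # The same request should claim the same identity on every channel.
    if len({s.claimed_identity for s in signals}) > 1:
        reasons.append("claimed identities differ across channels")
    for s in signals:
        if not s.origin_known:
            reasons.append(f"{s.channel}: origin does not match records")
        if s.classifier_score >= score_threshold:
            reasons.append(f"{s.channel}: classifier score at or above threshold")
    return reasons


request = [
    ChannelSignal("voice", "cfo", origin_known=True, classifier_score=0.2),
    ChannelSignal("email", "cfo", origin_known=False, classifier_score=0.1),
]
print(correlate(request))
```

The function returns reasons rather than a verdict, so a reviewer can still apply the human verification step described above before any payment is released.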

Economic Impact Modeling

Quantifying potential losses requires modeling based on adoption curves of previous digital fraud innovations. When email phishing matured, total losses grew by roughly 400% over five years before plateauing as filters improved. If deepfake scams follow a similar trajectory, analysts project global annual losses in the low billions within a decade—significant but not catastrophic compared to total cybercrime estimates.
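As a rough check on what that phishing trajectory implies, the sketch below converts "roughly 400% over five years" into a constant yearly growth rate. It assumes that 400% growth means losses ended at five times their starting level, and it tracks a unitless index starting at 1.0 rather than any real loss figure; the function names are illustrative.

```python
def implied_annual_growth(total_growth_pct: float, years: int) -> float:
    """Constant yearly growth rate that compounds to the given total growth."""
    end_multiple = 1 + total_growth_pct / 100  # 400% growth -> 5x the starting level
    return end_multiple ** (1 / years) - 1


def project_index(start: float, annual_rate: float, years: int) -> list[float]:
    """Yearly values of an index growing at a constant rate (year 0 included)."""
    return [start * (1 + annual_rate) ** y for y in range(years + 1)]


rate = implied_annual_growth(400, 5)
trajectory = project_index(1.0, rate, 5)
print(f"implied annual growth: {rate:.1%}")
print([round(v, 2) for v in trajectory])
```

Under these assumptions the implied rate is roughly 38% per year. Real adoption curves are rarely this smooth, so the figure is best read as an order-of-magnitude guide.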

Importantly, the cost-to-create curve favors attackers: open-source tools can generate convincing deepfakes with minimal expense. Defensive technologies, by contrast, require specialized infrastructure and ongoing calibration. This asymmetry mirrors early malware economics.

Policy and Regulatory Developments

Governments are beginning to integrate deepfake-specific clauses into existing fraud and identity laws. Some jurisdictions classify synthetic media used for deception as aggravated fraud, increasing penalties. Regulatory bodies, including those cited by the NCSC, advocate for shared intelligence frameworks that merge cybercrime reporting with audiovisual forensics.

The broader objective is systemic cybercrime prevention: embedding media authenticity verification into payment authorization, customer onboarding, and compliance workflows. However, implementation remains uneven. Difficulties with cross-border data sharing hinder coordinated enforcement, allowing transnational scammers to exploit legal gray zones.

Industry Response and Tool Maturity

Financial institutions are experimenting with real-time deepfake detection APIs and authentication “liveness checks” in video KYC. Early adopters report false positive rates near 10%, acceptable for screening but not final verification. Integration with behavioral biometrics—typing rhythm, device motion, contextual location—promises greater reliability.
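A short base-rate calculation shows why a false positive rate near 10% suits screening but not final verification. In this sketch only the 10% figure comes from the text above; the 1% share of sessions that are actually deepfakes and the 90% detection rate are assumptions chosen purely for illustration.

```python
def flag_precision(prevalence: float, detection_rate: float, false_positive_rate: float) -> float:
    """Share of flagged sessions that are genuinely deepfakes (Bayes' rule)."""
    true_flags = prevalence * detection_rate               # deepfakes correctly flagged
    false_flags = (1 - prevalence) * false_positive_rate   # genuine users wrongly flagged
    return true_flags / (true_flags + false_flags)


# Assumed inputs: 1% of sessions are deepfakes, 90% of those are caught,
# and 10% of genuine sessions are flagged by mistake.
precision = flag_precision(prevalence=0.01, detection_rate=0.90, false_positive_rate=0.10)
print(f"share of flags that are real deepfakes: {precision:.1%}")
```

With these inputs only about 8% of flagged sessions would be real deepfakes, so most flags would need a second check before anyone is rejected.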

Meanwhile, large technology platforms are piloting watermarking for synthetic media. Success depends on adoption breadth: if only legitimate actors watermark their output, criminals will simply omit the mark, and its absence proves nothing. A parallel can be drawn with HTTPS, where universal adoption, not selective use, made it meaningful.

Comparative Risks Across Sectors

Not all sectors face equal exposure. Financial services and corporate treasury departments are prime targets because they combine authority with liquidity. Public-sector agencies face reputational rather than monetary harm. Retail consumers encounter smaller scams but in higher volumes.

Data from the NCSC suggest that enterprises with mature incident reporting structures detect deepfake fraud roughly twice as quickly as those without dedicated response teams. This reinforces the link between procedural readiness and resilience.

Outlook: Balancing Awareness and Automation

In the near term, hybrid defense strategies that pair machine detection with human verification will define best practice. Over the next five years, expect convergence between fraud analytics and multimedia authenticity tools, turning cybercrime prevention into an integrated discipline spanning financial, reputational, and behavioral safeguards.

Still, technology alone cannot neutralize deception. Deepfake financial scams exploit trust as much as code. The long-term solution lies in cultivating informed skepticism—verifying voices, cross-checking video requests, and maintaining multiple communication channels for sensitive transactions.

As with every technological leap, the balance between risk and readiness will hinge on collaboration among regulators, analysts, and end users. If transparency and education scale alongside innovation, the next phase of digital finance may still prove safer than the one we inhabit now.